hdinsight.github.io

How do I configure a Spark application through a Jupyter notebook on HDInsight clusters?

Issue:

You need to configure the amount of memory and the number of cores that a Spark application can use when it runs from a Jupyter notebook on an HDInsight cluster.

- Refer to the topic "Why did my Spark application fail with OutOfMemoryError?" to determine which Spark configurations need to be set and to what values.

-

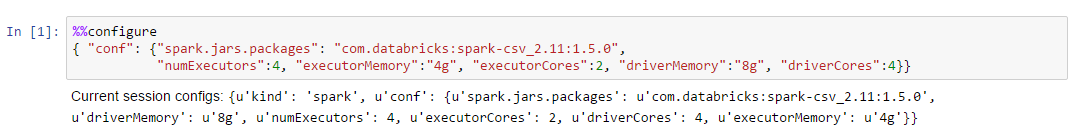

Specify the Spark configurations in valid JSON format in the first cell of the Jupyter notebook after the %%configure directive (change the actual values as applicable):
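Because the body after `%%configure` must be valid JSON, it can help to generate it instead of typing it by hand. The following is a minimal Python sketch, assuming the notebook uses the sparkmagic kernels that HDInsight Jupyter provides. `build_configure_cell` is a hypothetical helper, the keys (`driverMemory`, `executorMemory`, `executorCores`, `numExecutors`, `conf`) are assumed to follow the Apache Livy session parameters, and all values are placeholders rather than recommendations.

```python
import json


def build_configure_cell(settings: dict, force: bool = False) -> str:
    """Hypothetical helper: return the text of a %%configure notebook cell.

    The first line is the sparkmagic magic; the rest is the JSON body.
    """
    # json.dumps always emits valid JSON (double-quoted keys, no trailing
    # commas), which is what %%configure expects after the magic line.
    body = json.dumps(settings, indent=2)
    # "-f" forces an already-running session to be recreated; it should not
    # be needed when this is the first cell executed in the notebook.
    magic = "%%configure -f" if force else "%%configure"
    return f"{magic}\n{body}"


# Placeholder values: replace them with the ones you determined for your job.
settings = {
    "driverMemory": "4g",    # memory for the driver process
    "executorMemory": "4g",  # memory per executor
    "executorCores": 2,      # cores per executor
    "numExecutors": 4,       # number of executors
    "conf": {
        # Other Spark properties can be passed through "conf"
        # (this property name applies to Spark 2.3 and later; value in MiB).
        "spark.executor.memoryOverhead": "1024",
    },
}

if __name__ == "__main__":
    print(build_configure_cell(settings))
```

Paste the printed text into the first cell of the notebook and run it before any other Spark code so that the session starts with these settings.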