hdinsight.github.io

How do I configure a Spark application through Ambari on HDInsight clusters?

Issue:

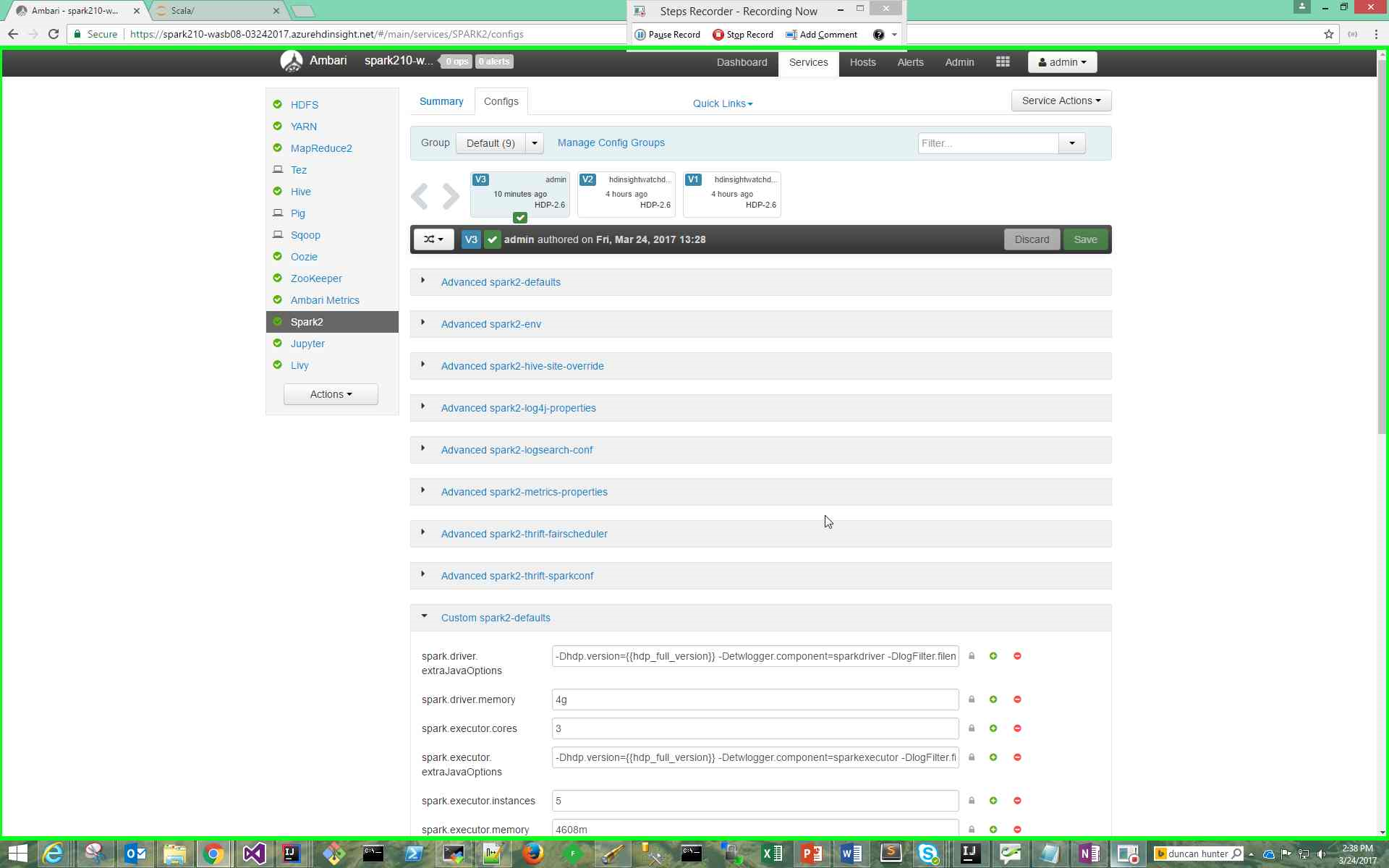

You need to configure, in Ambari, the amount of memory and the number of cores that a Spark application can use.
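To make "amount of memory and number of cores" concrete, the following is a rough sketch of how an application's total request could be estimated from the usual Spark properties (`spark.executor.instances`, `spark.executor.memory`, `spark.executor.cores`, `spark.driver.memory`). The `SparkResources` class, the function names, and the example values are hypothetical, and the estimate deliberately ignores driver overhead and driver cores. It assumes Spark's default executor memory overhead of 10% of executor memory with a 384 MB minimum.

```python
from dataclasses import dataclass

MIN_OVERHEAD_MB = 384  # assumed default minimum executor memory overhead


@dataclass
class SparkResources:
    """Hypothetical container for the settings discussed in this article."""
    executor_instances: int  # spark.executor.instances
    executor_memory_mb: int  # spark.executor.memory, expressed in MB
    executor_cores: int      # spark.executor.cores
    driver_memory_mb: int    # spark.driver.memory, expressed in MB


def executor_container_mb(executor_memory_mb: int) -> int:
    """Executor heap plus the assumed default overhead (10%, at least 384 MB)."""
    overhead = max(MIN_OVERHEAD_MB, executor_memory_mb // 10)
    return executor_memory_mb + overhead


def estimate_request(res: SparkResources) -> dict:
    """Approximate total memory (MB) and executor cores an application requests."""
    executors_mb = res.executor_instances * executor_container_mb(res.executor_memory_mb)
    return {
        "memory_mb": executors_mb + res.driver_memory_mb,  # driver overhead ignored
        "cores": res.executor_instances * res.executor_cores,  # driver cores ignored
    }


# Illustrative values only, not a recommendation.
example = SparkResources(executor_instances=4, executor_memory_mb=4096,
                         executor_cores=2, driver_memory_mb=2048)
print(estimate_request(example))
```

Comparing an estimate like this with the memory and cores available to YARN on the cluster gives a quick sanity check before changing values in Ambari.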

Resolution Steps:

- Refer to the topic "Why did my Spark application fail with OutOfMemoryError?" to determine which Spark configurations need to be set and to what values.

-

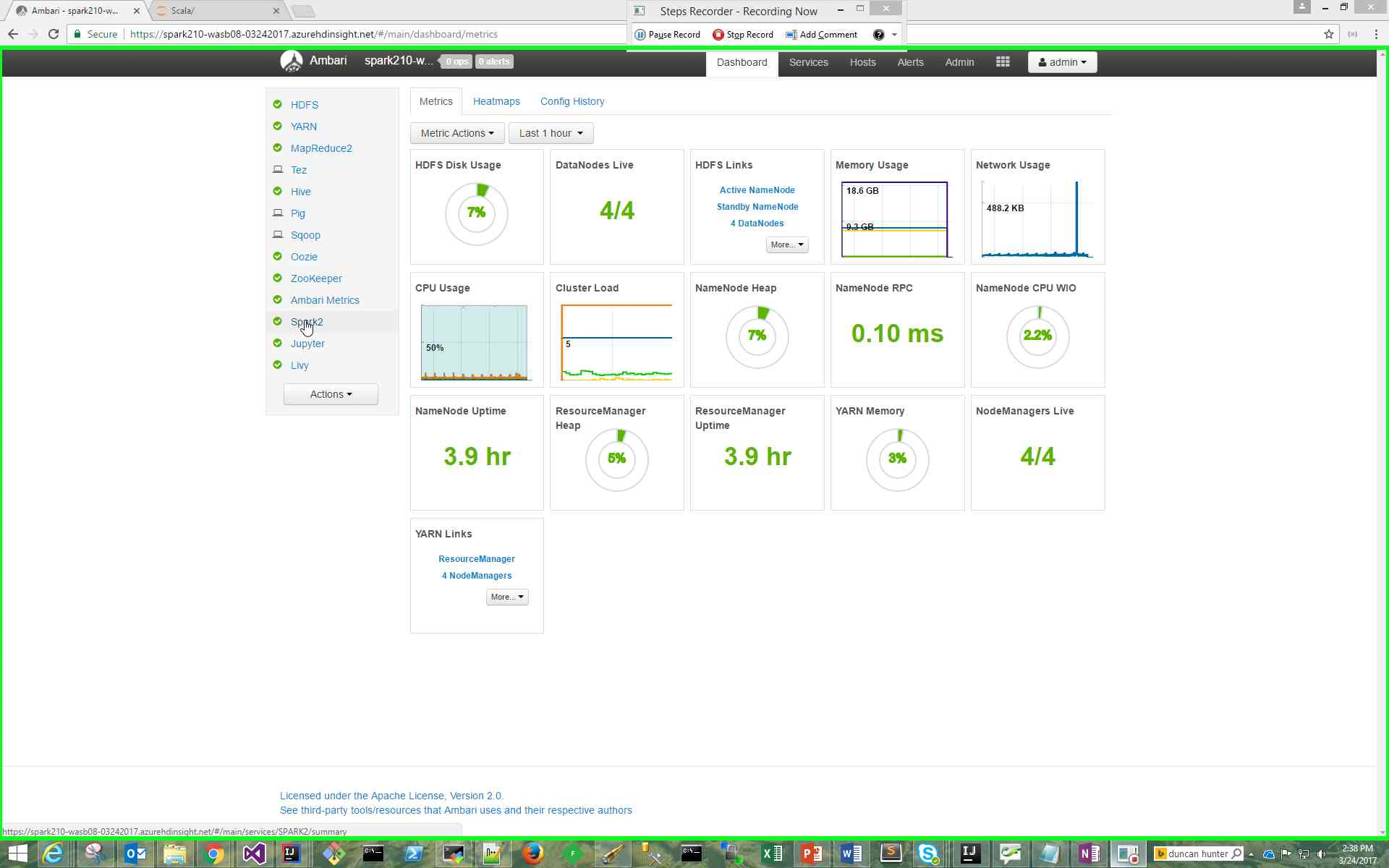

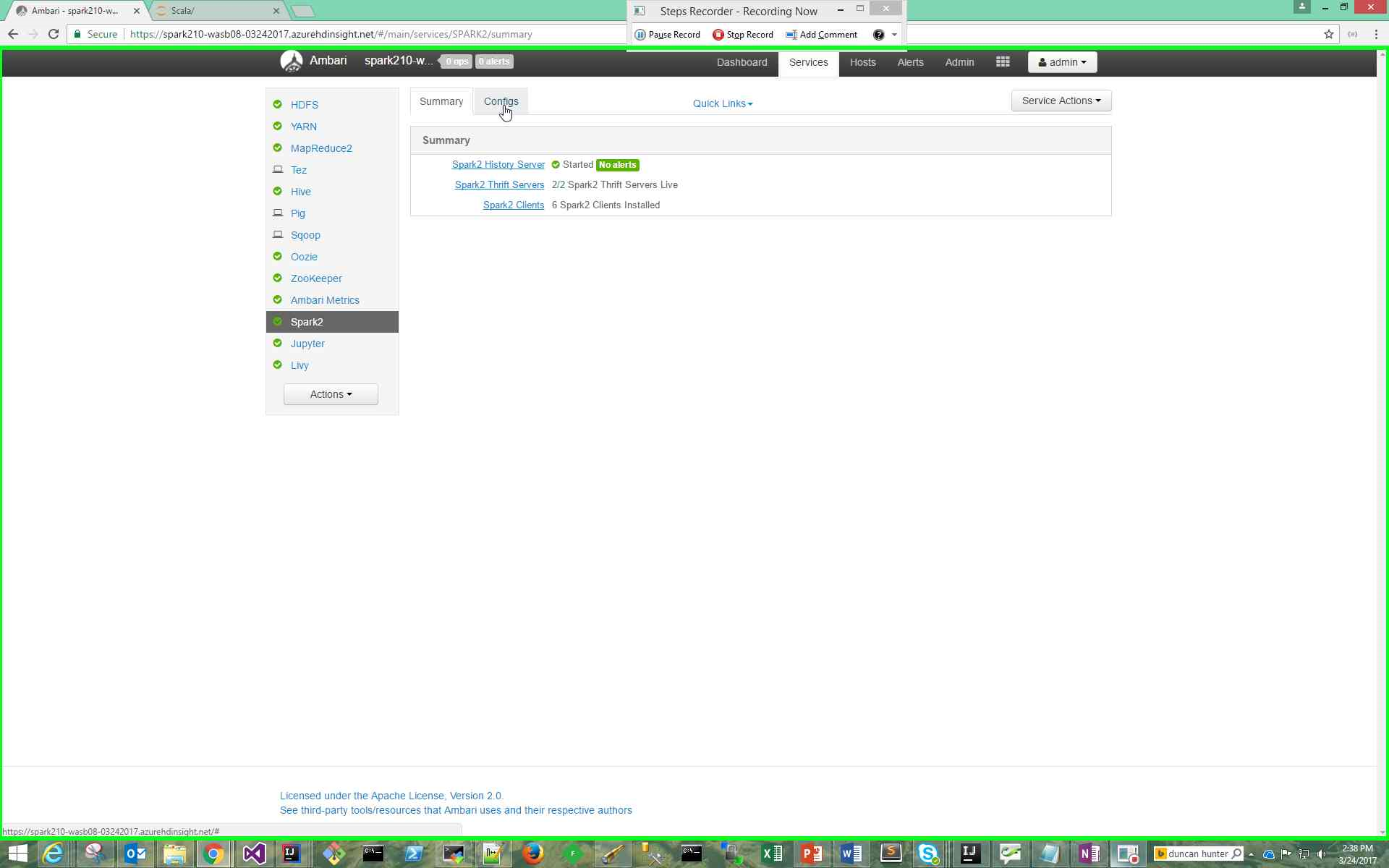

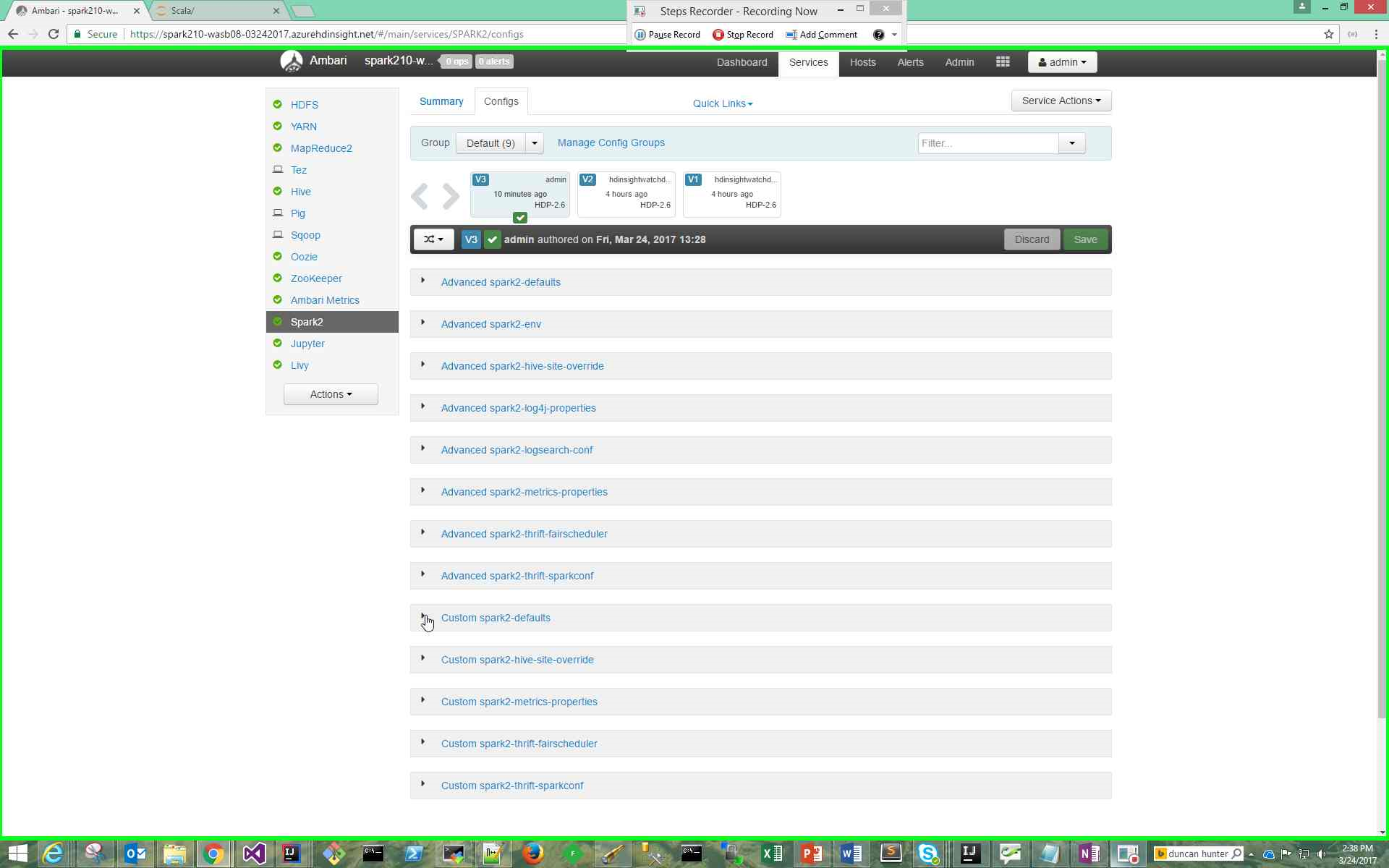

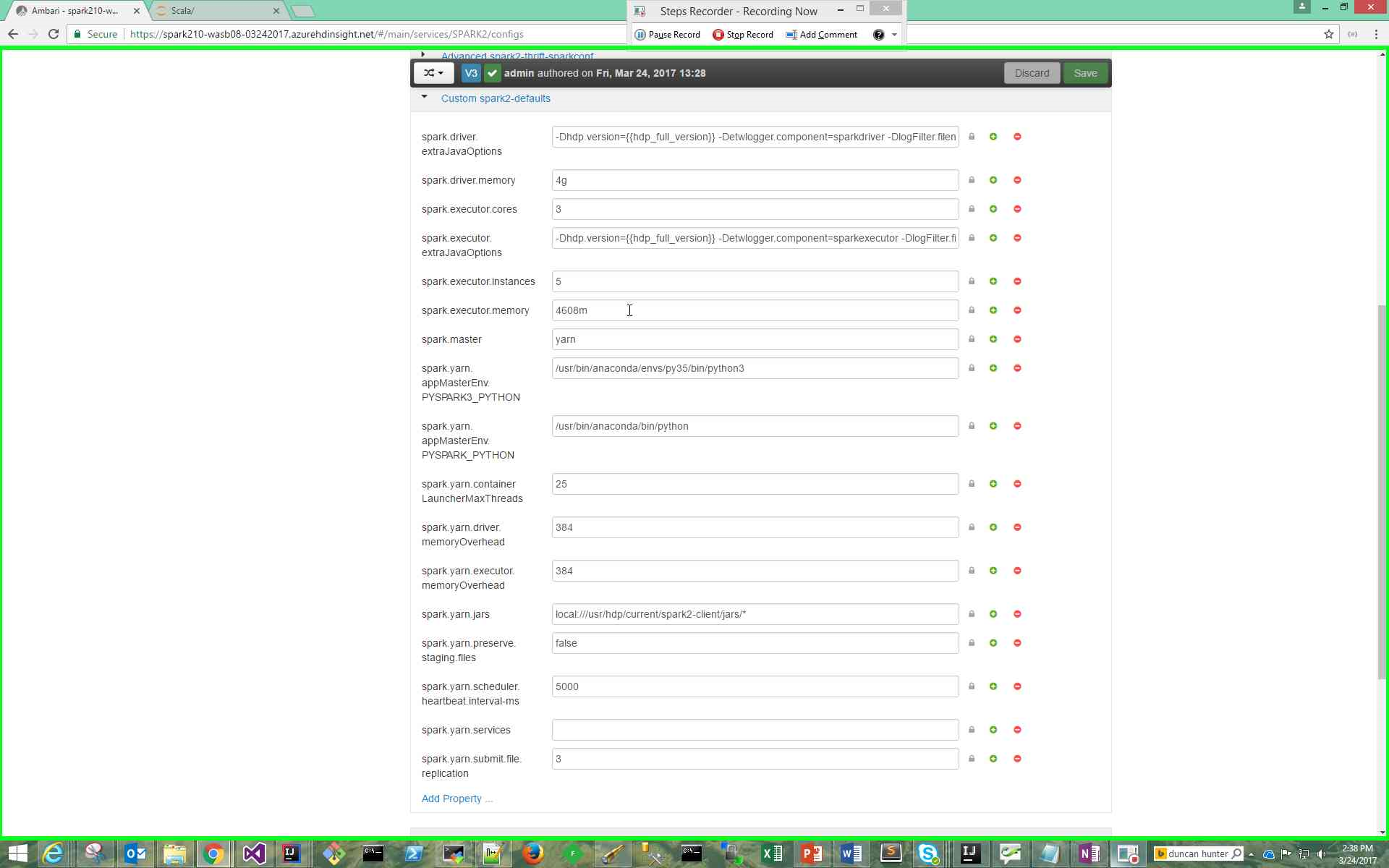

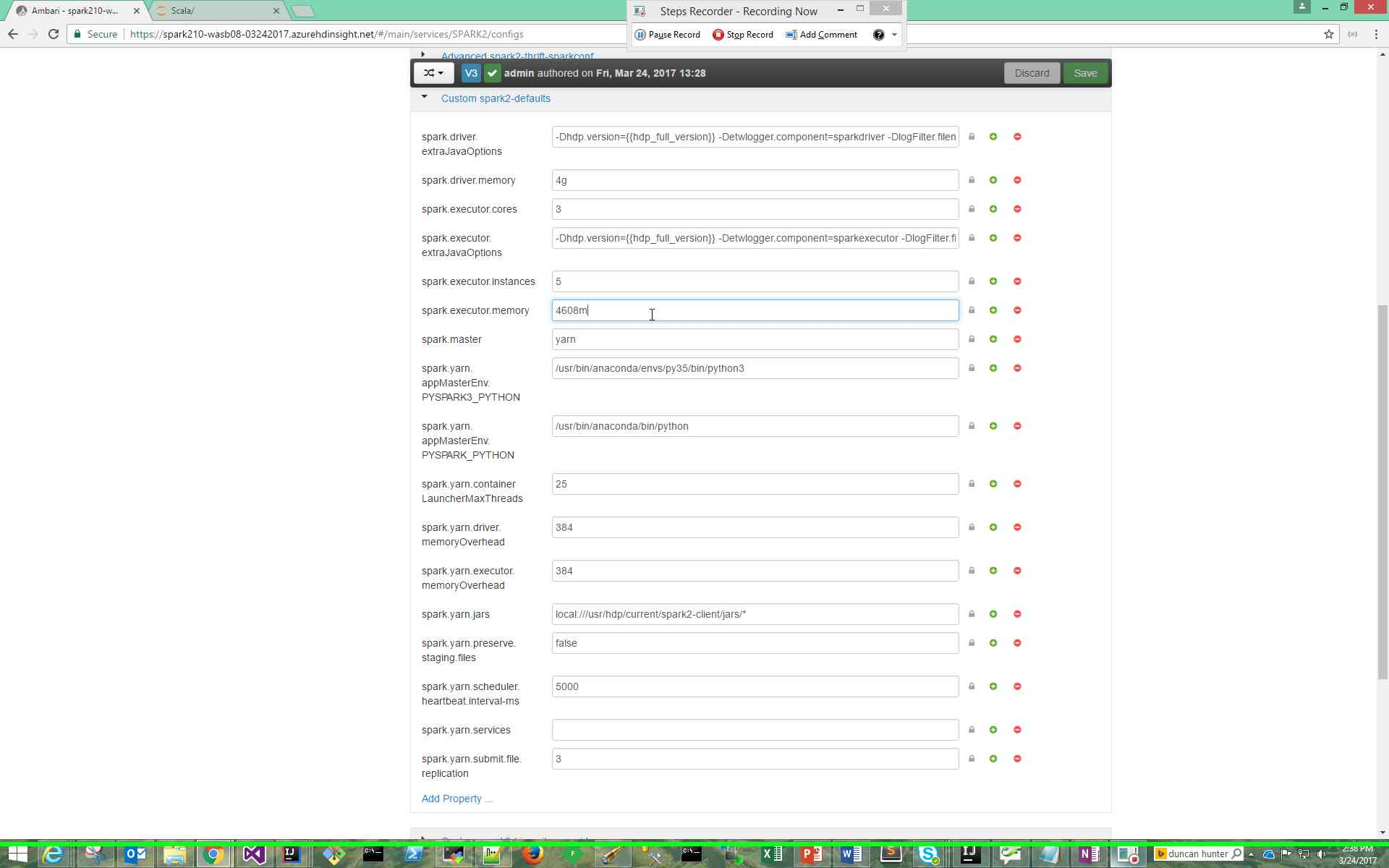

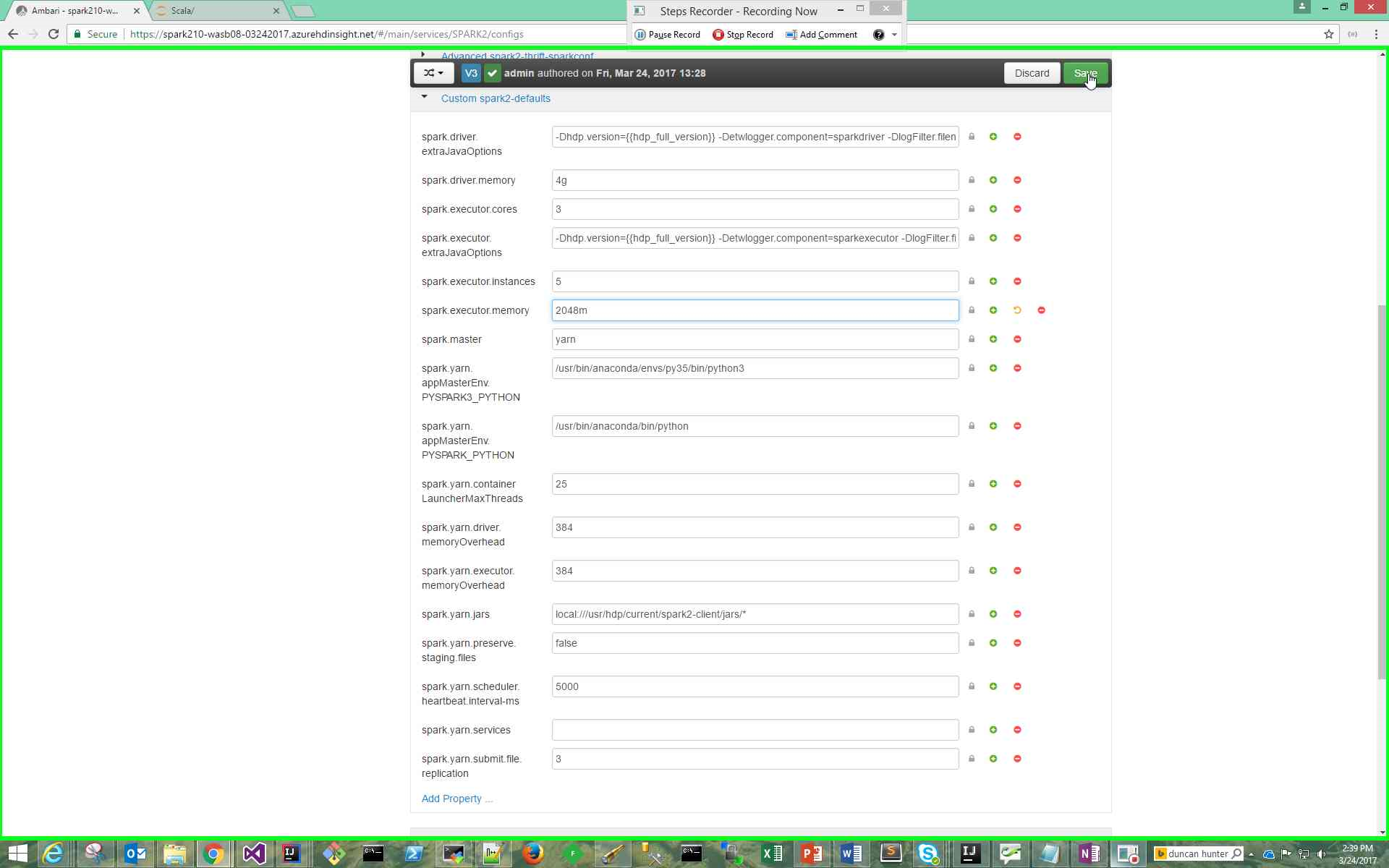

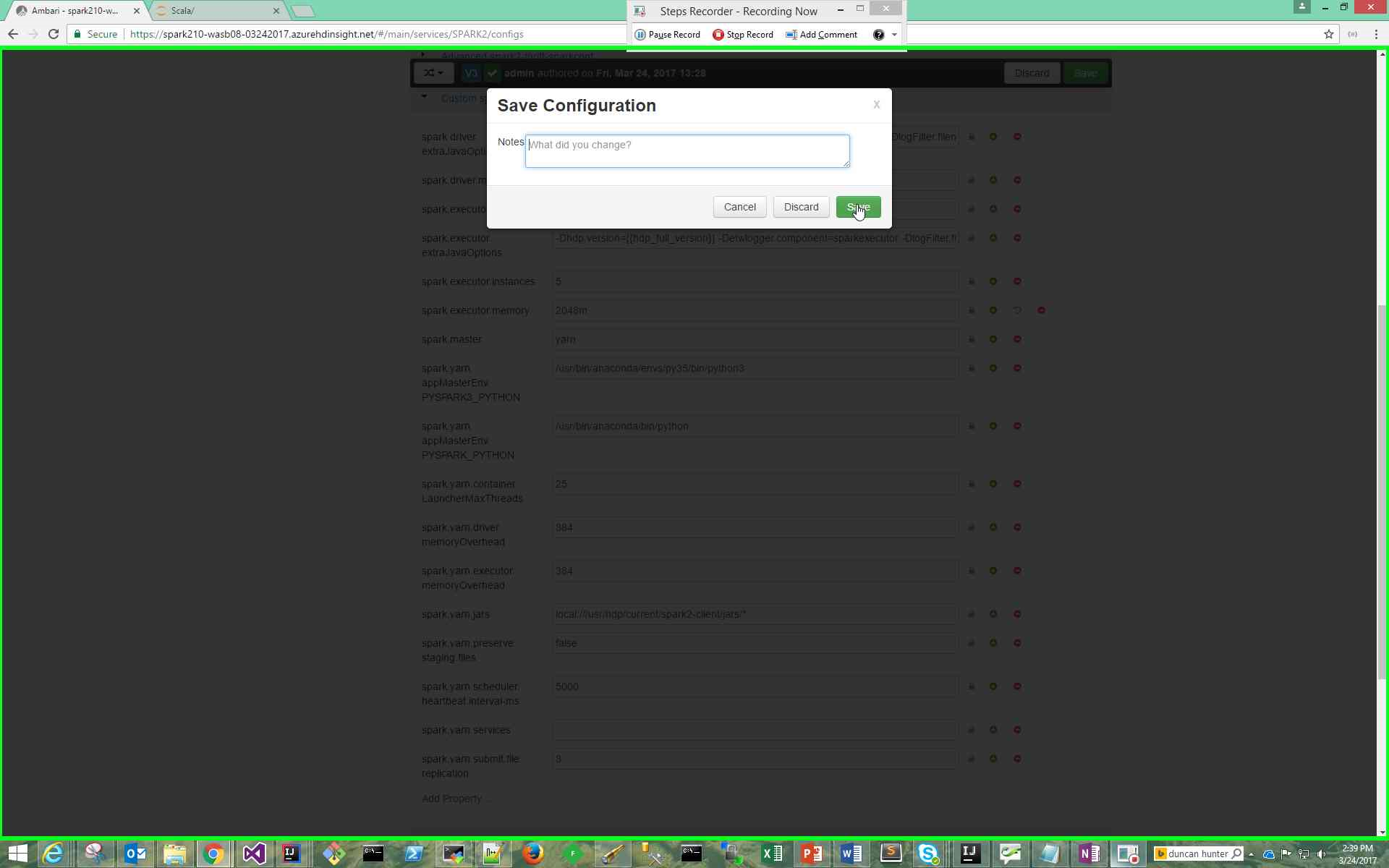

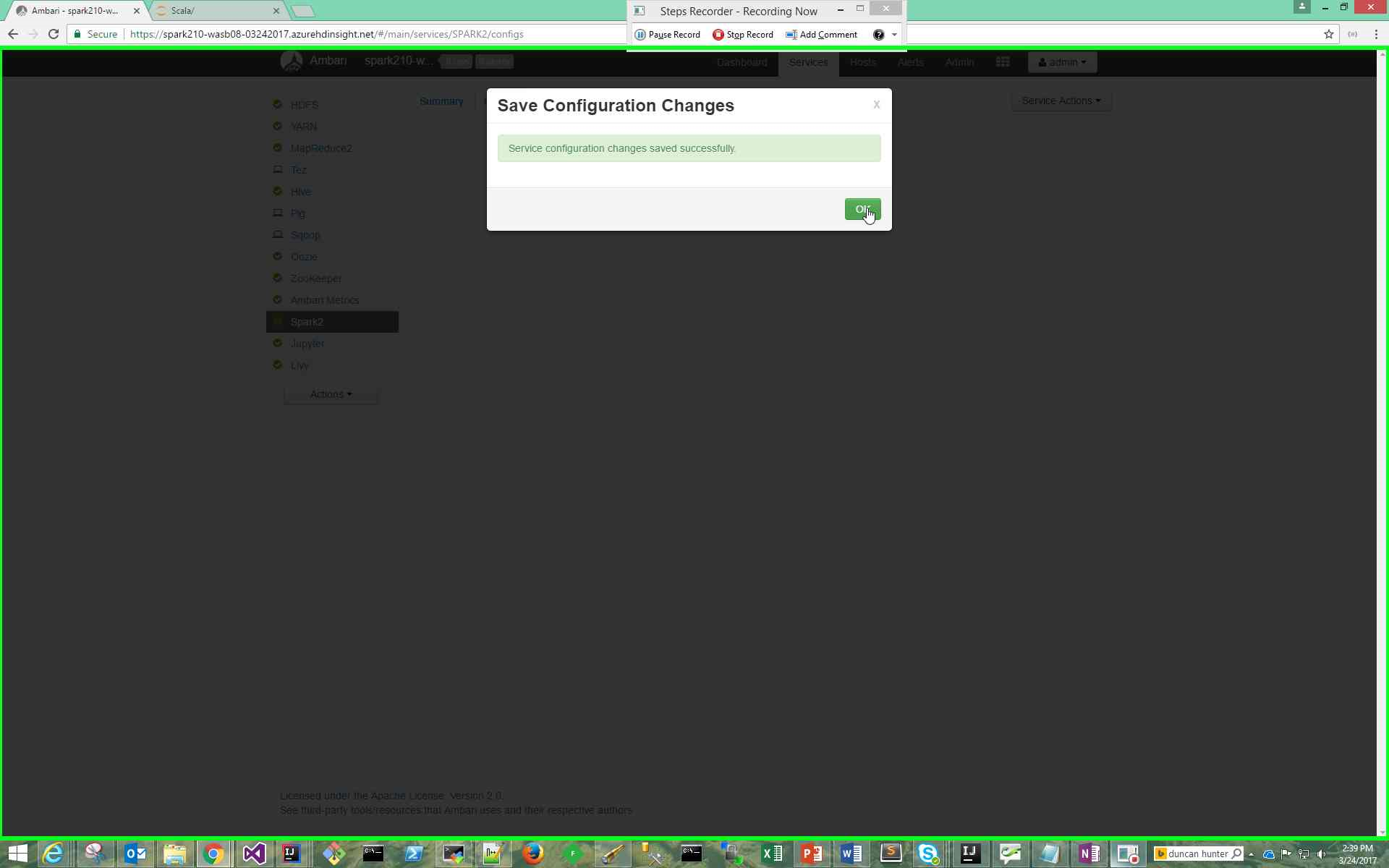

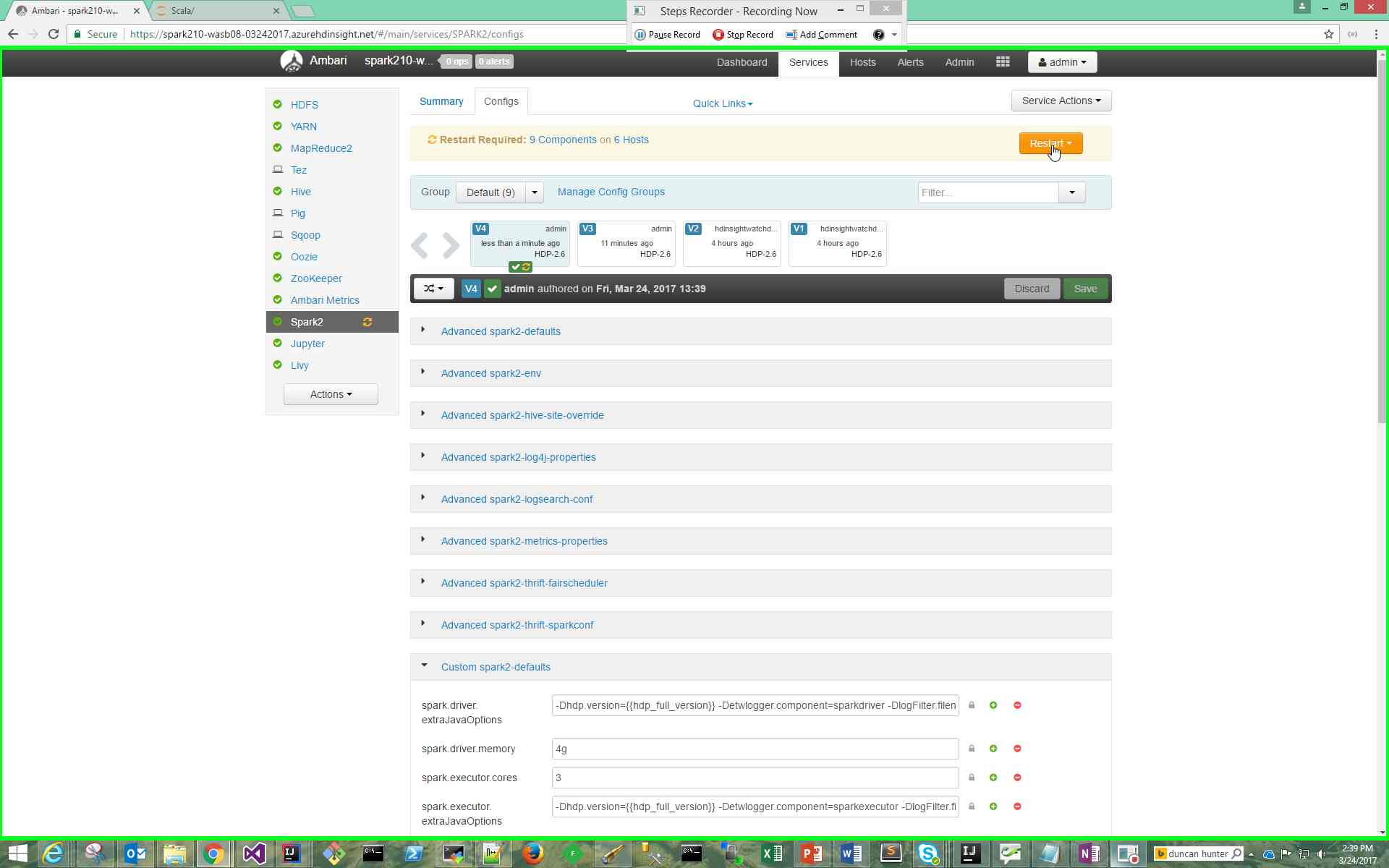

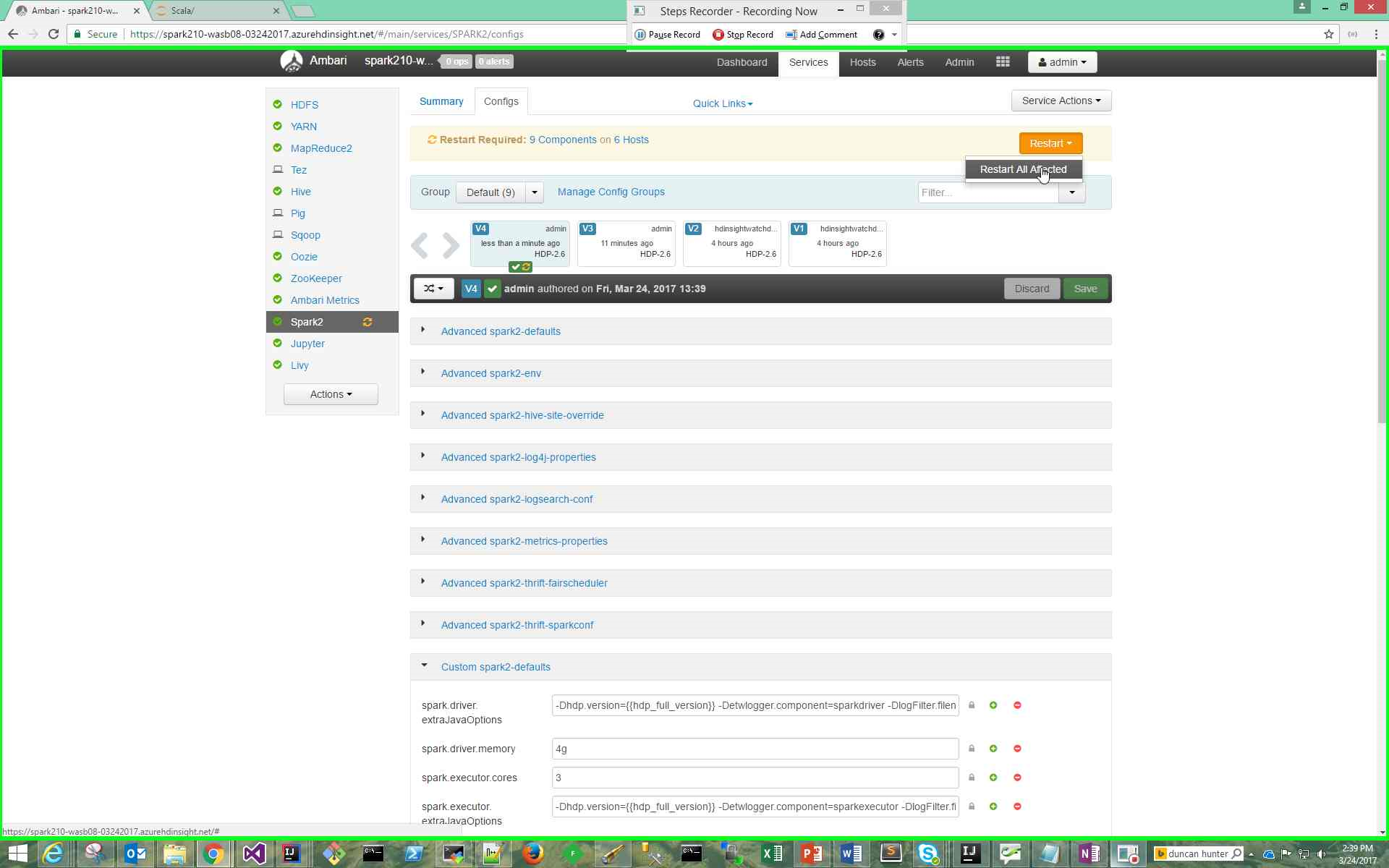

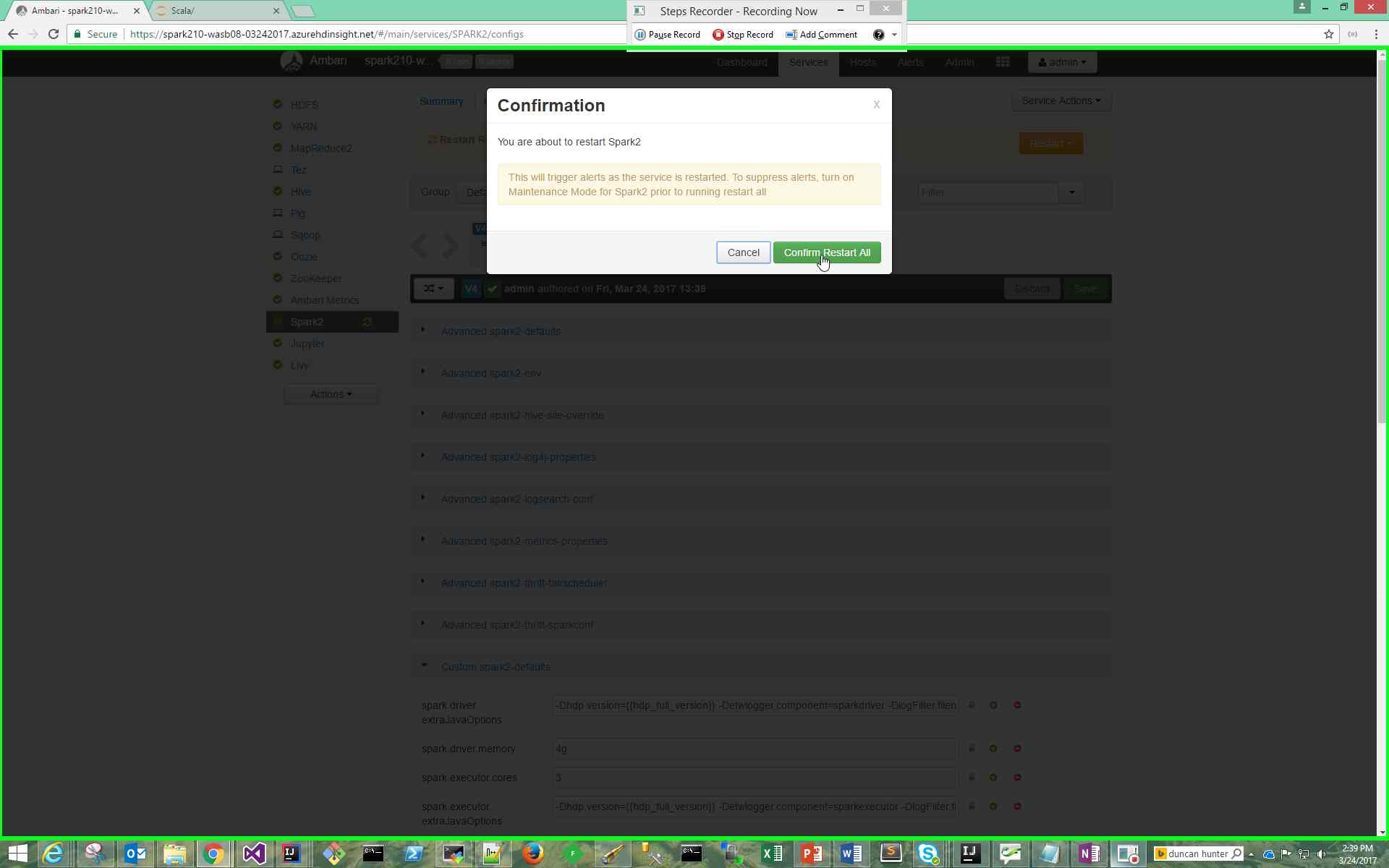

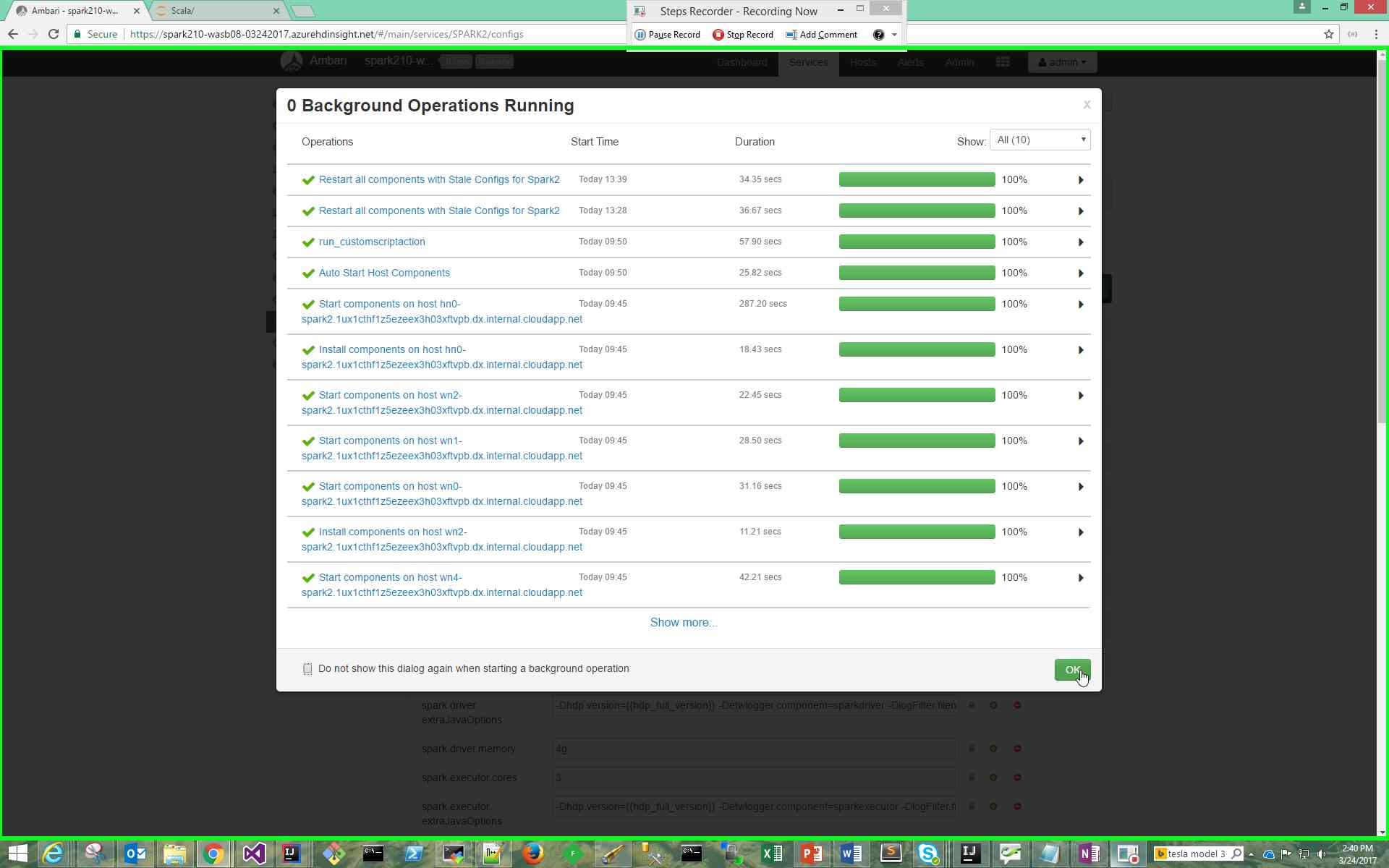

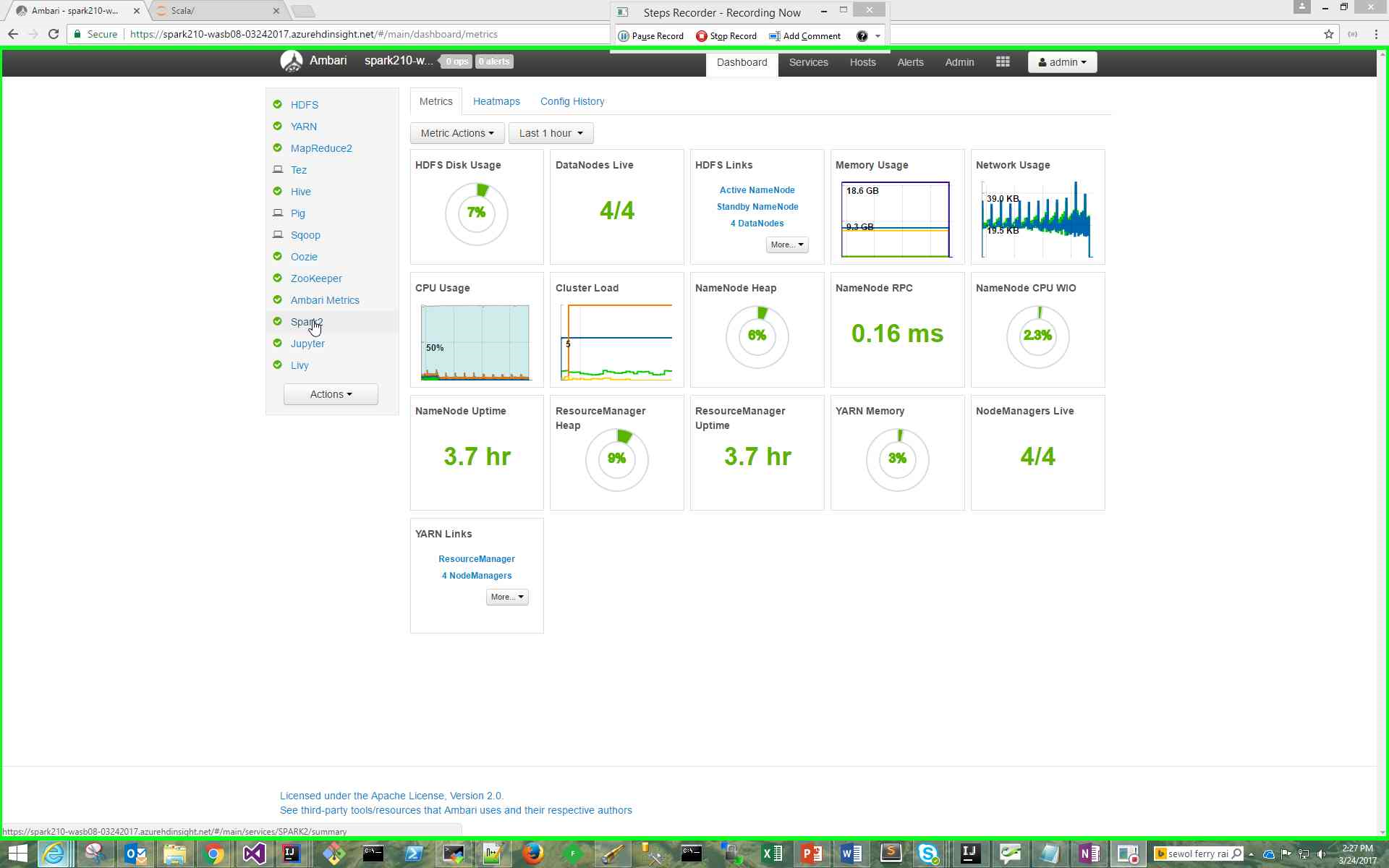

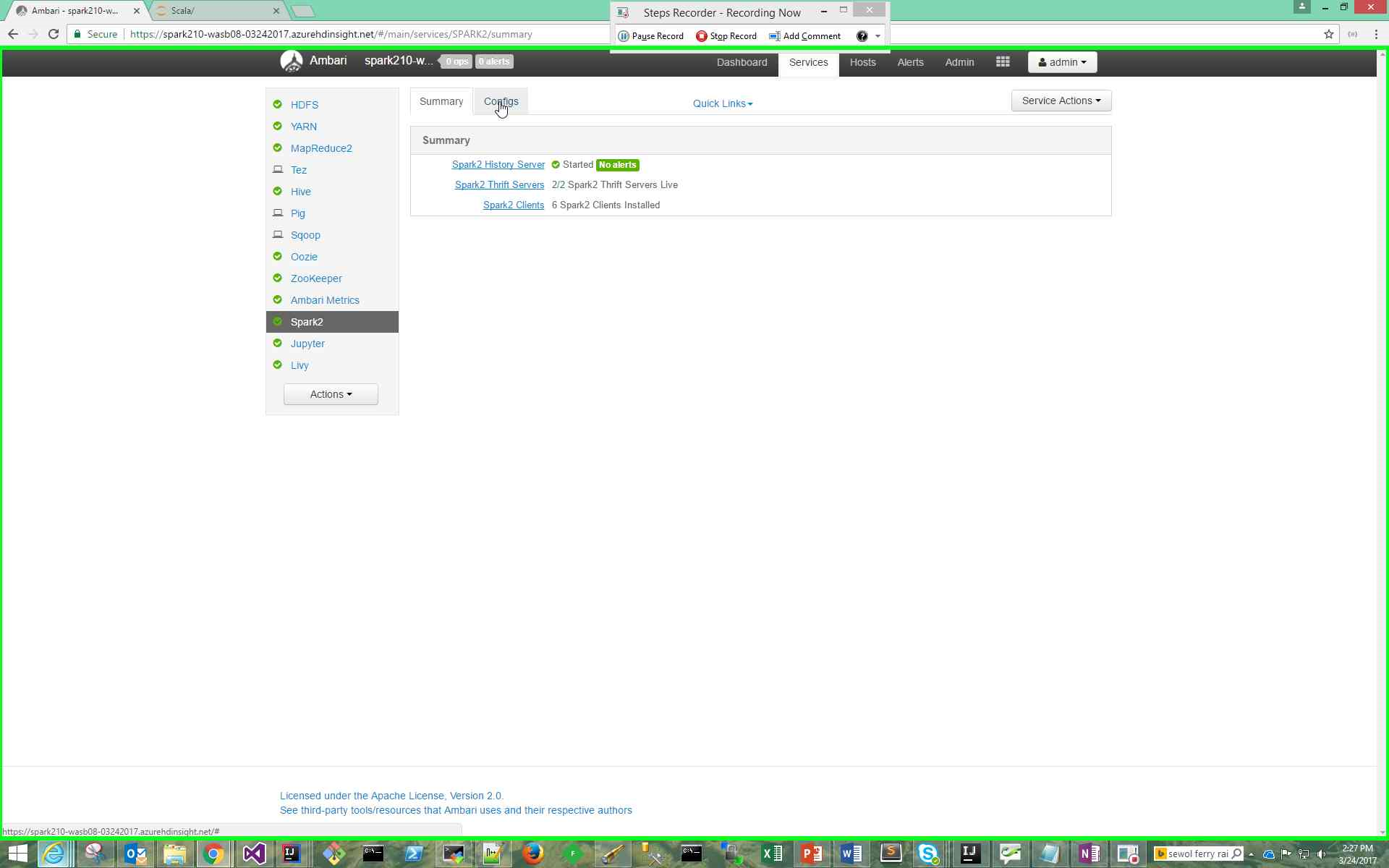

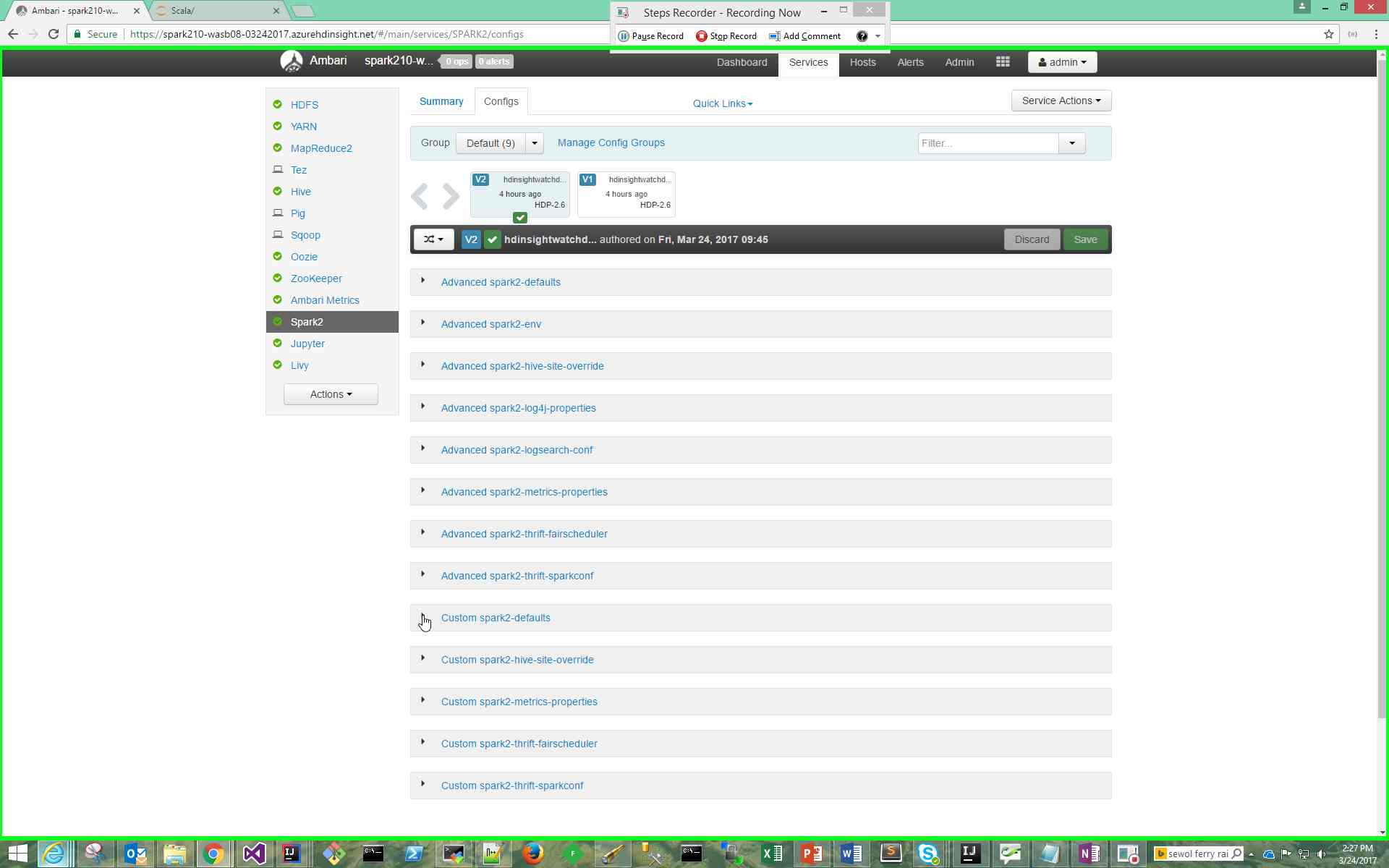

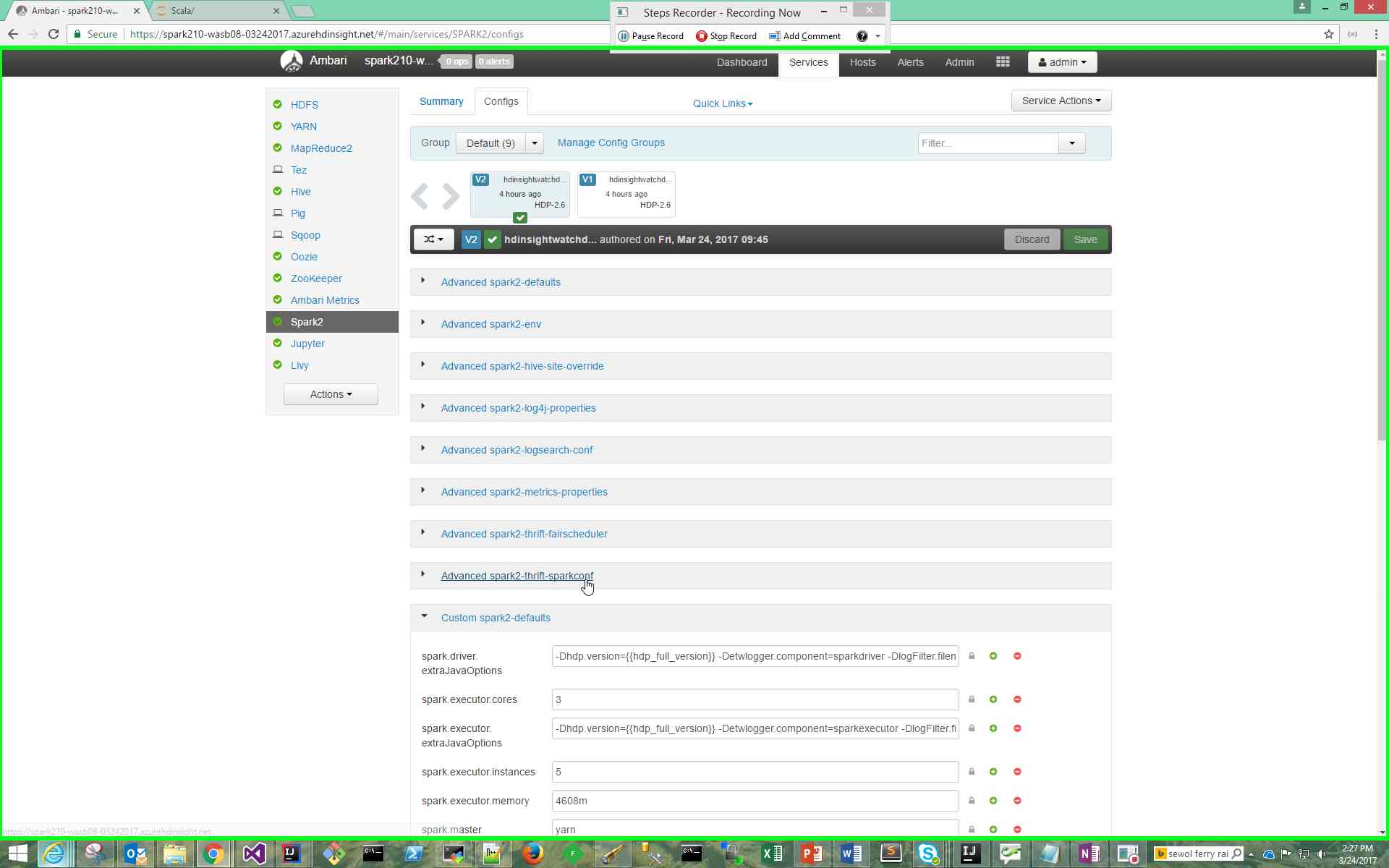

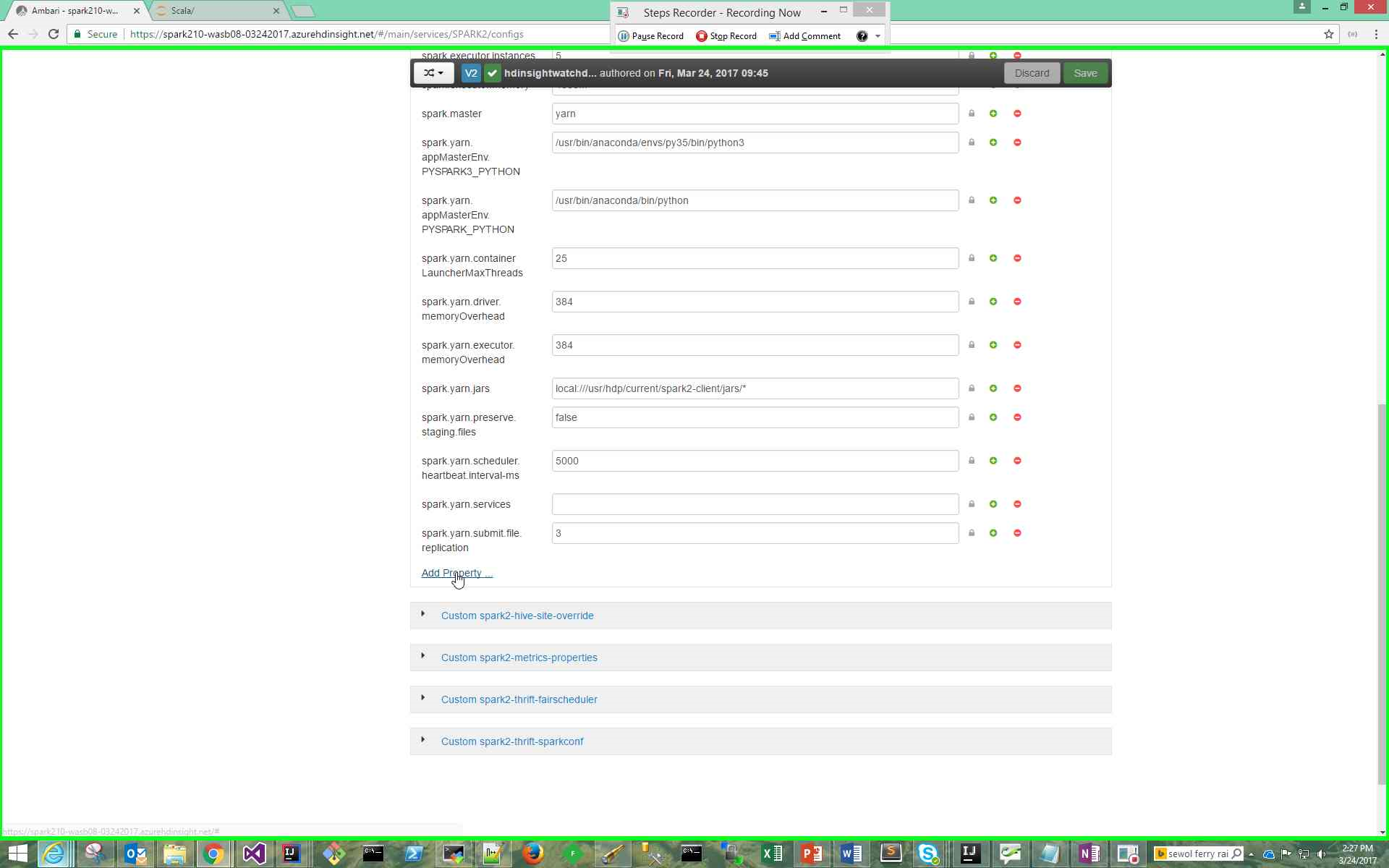

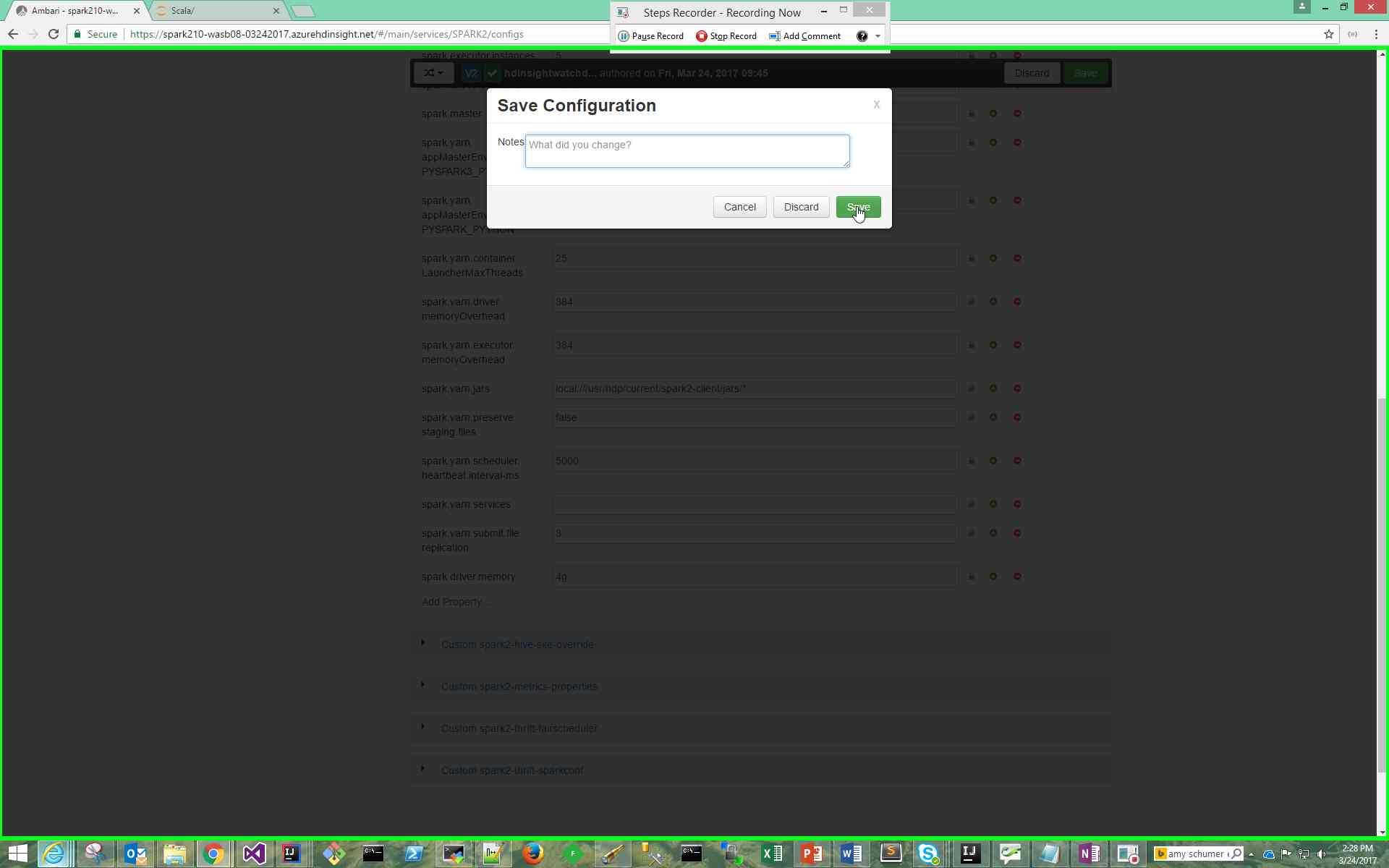

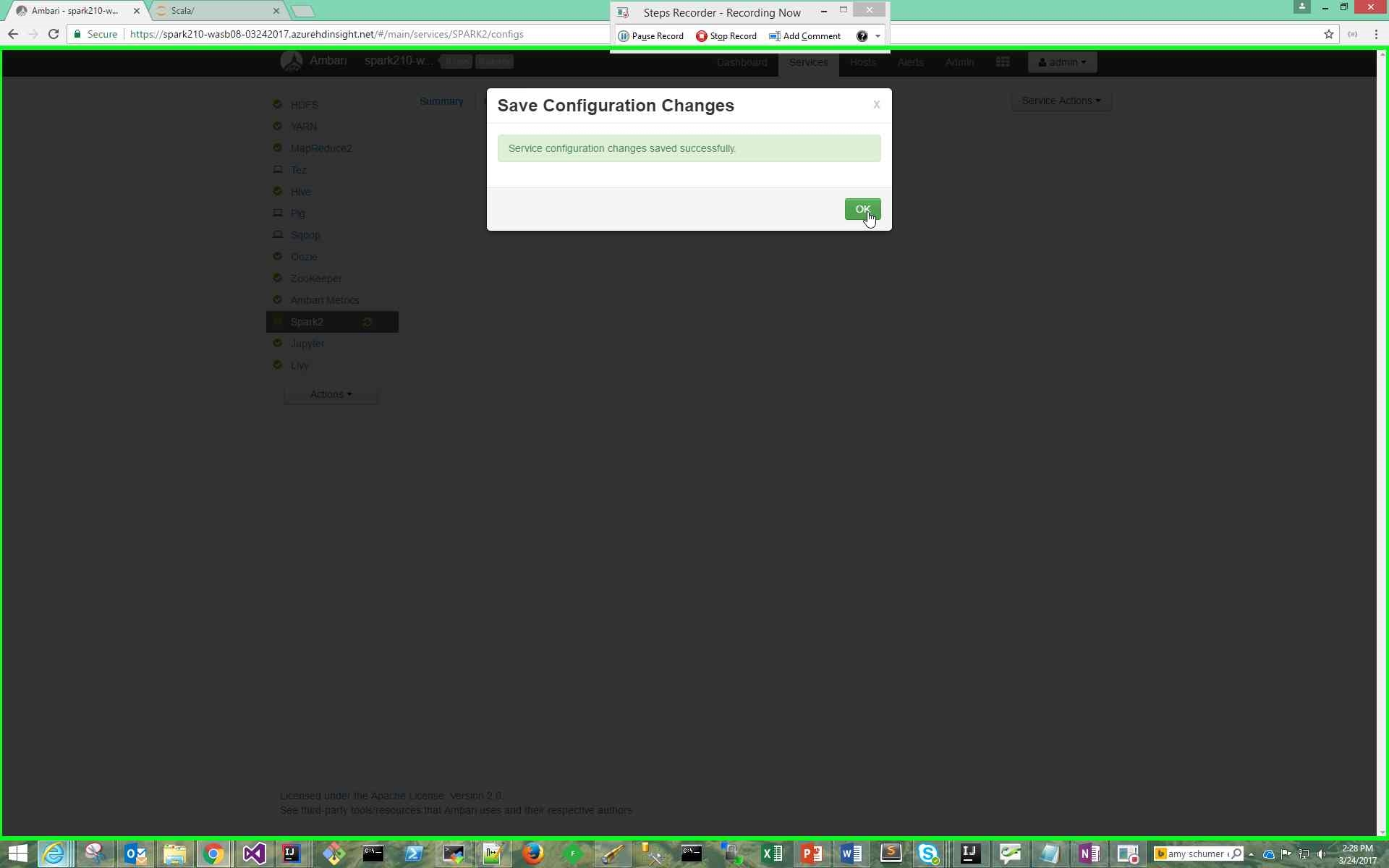

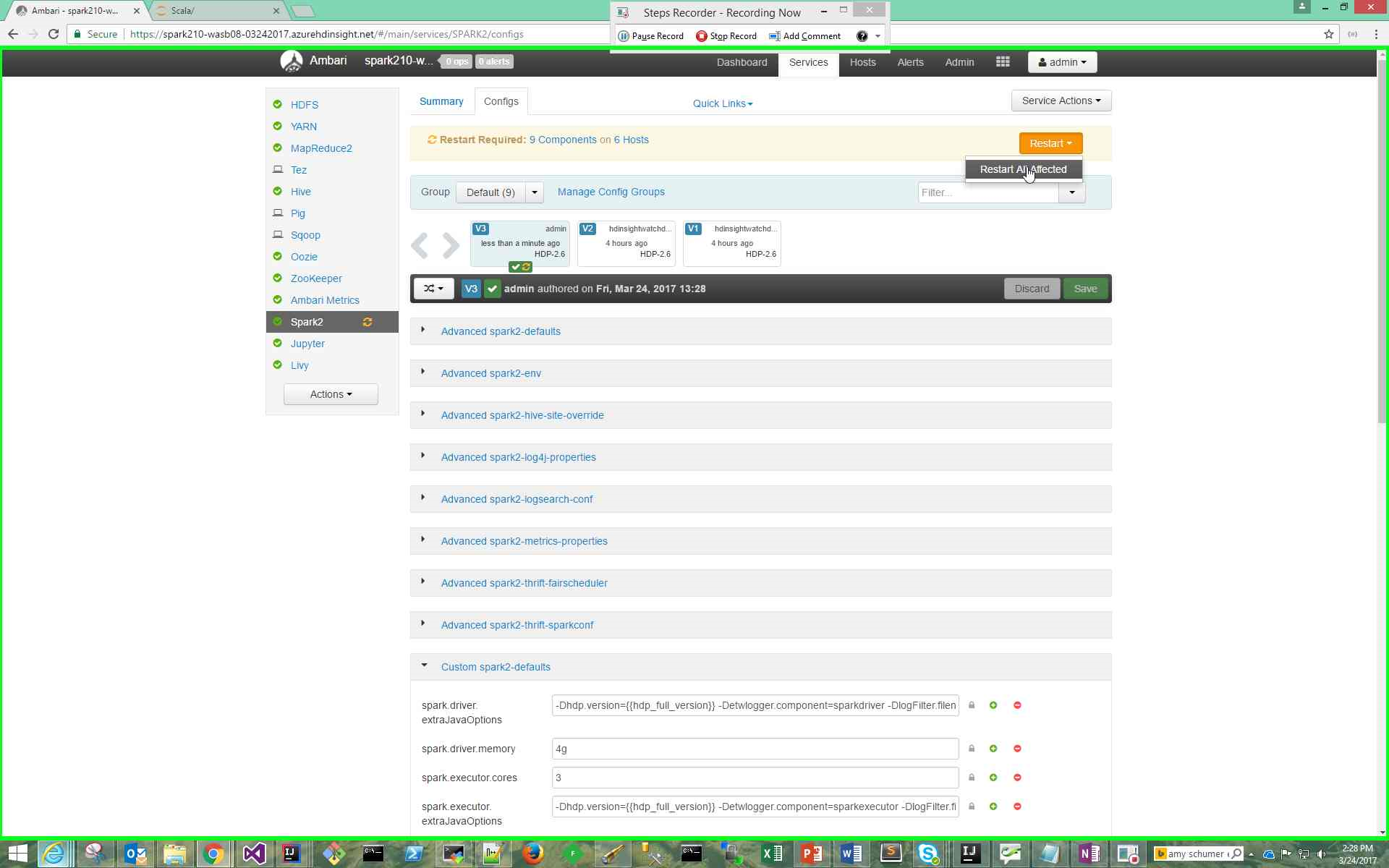

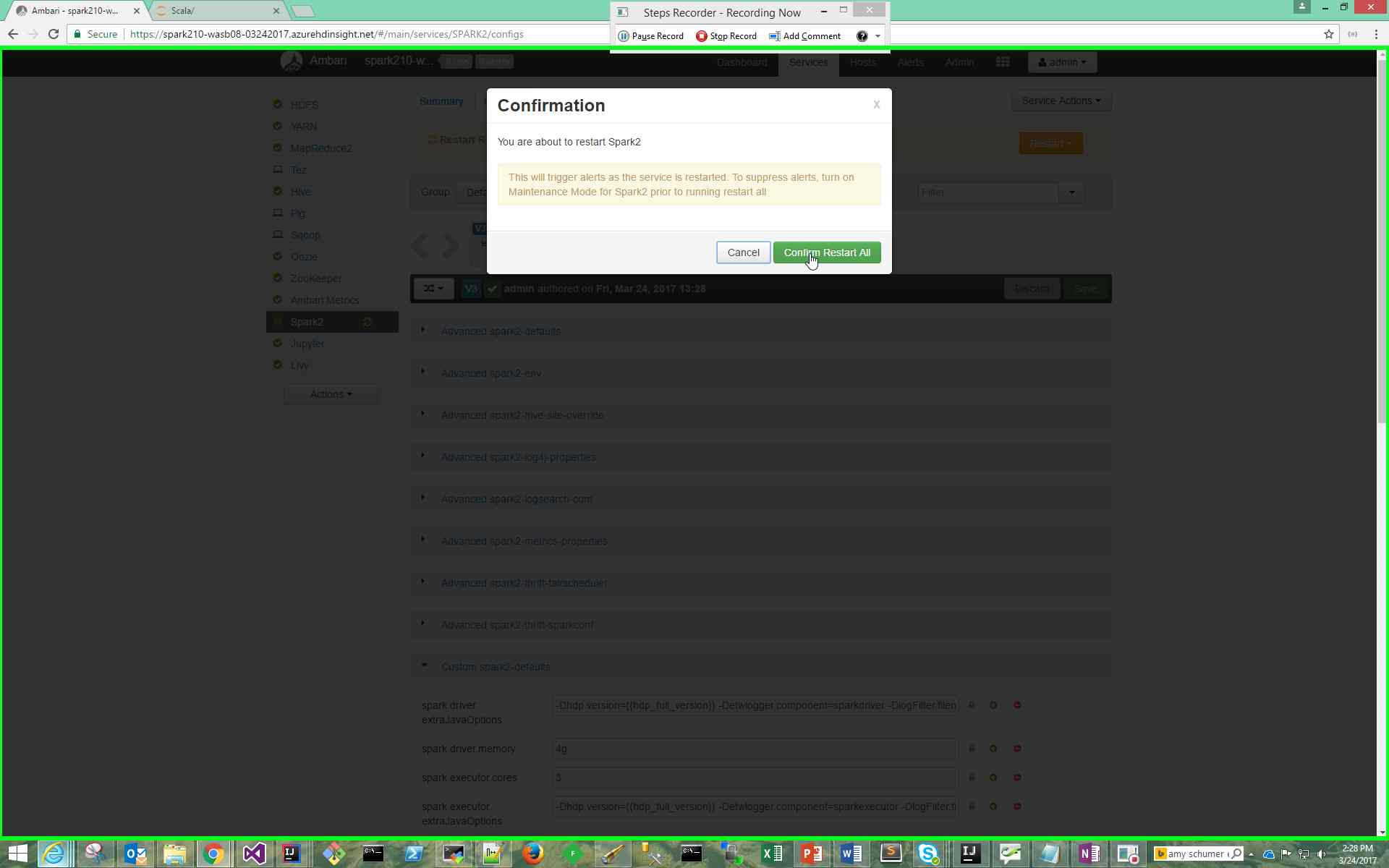

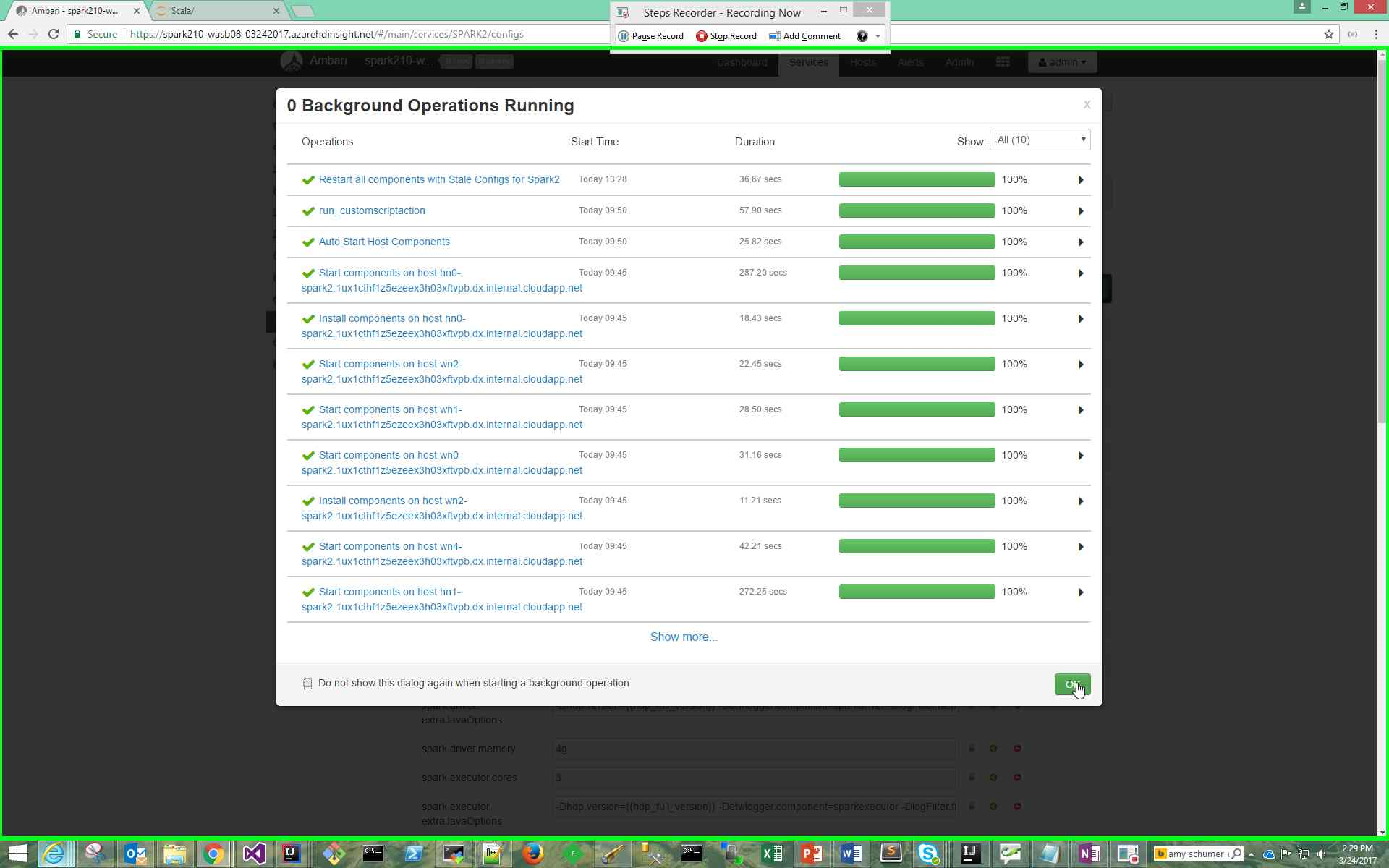

Update configurations whose values are already set in the HDInsight Spark clusters with the following steps:

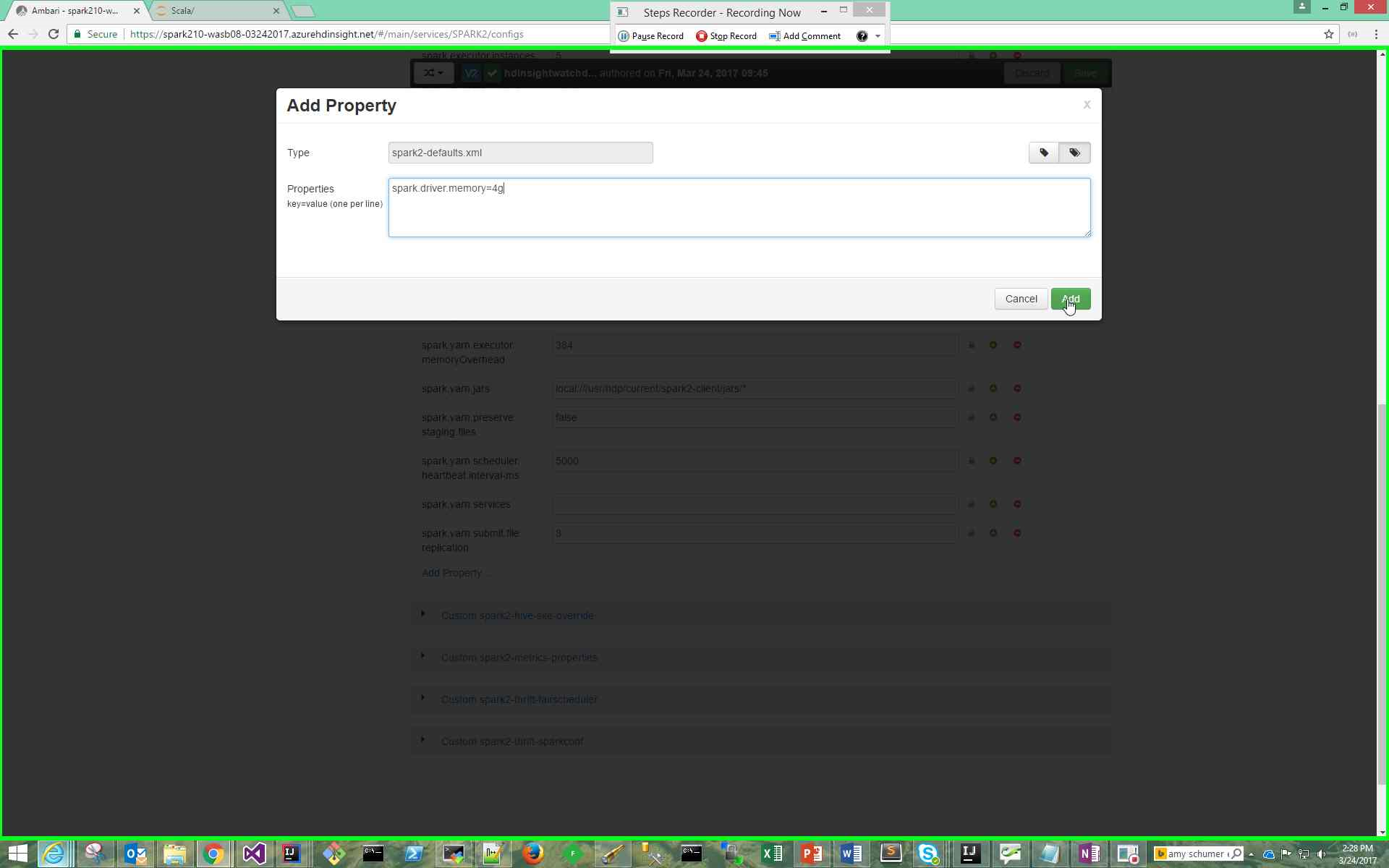

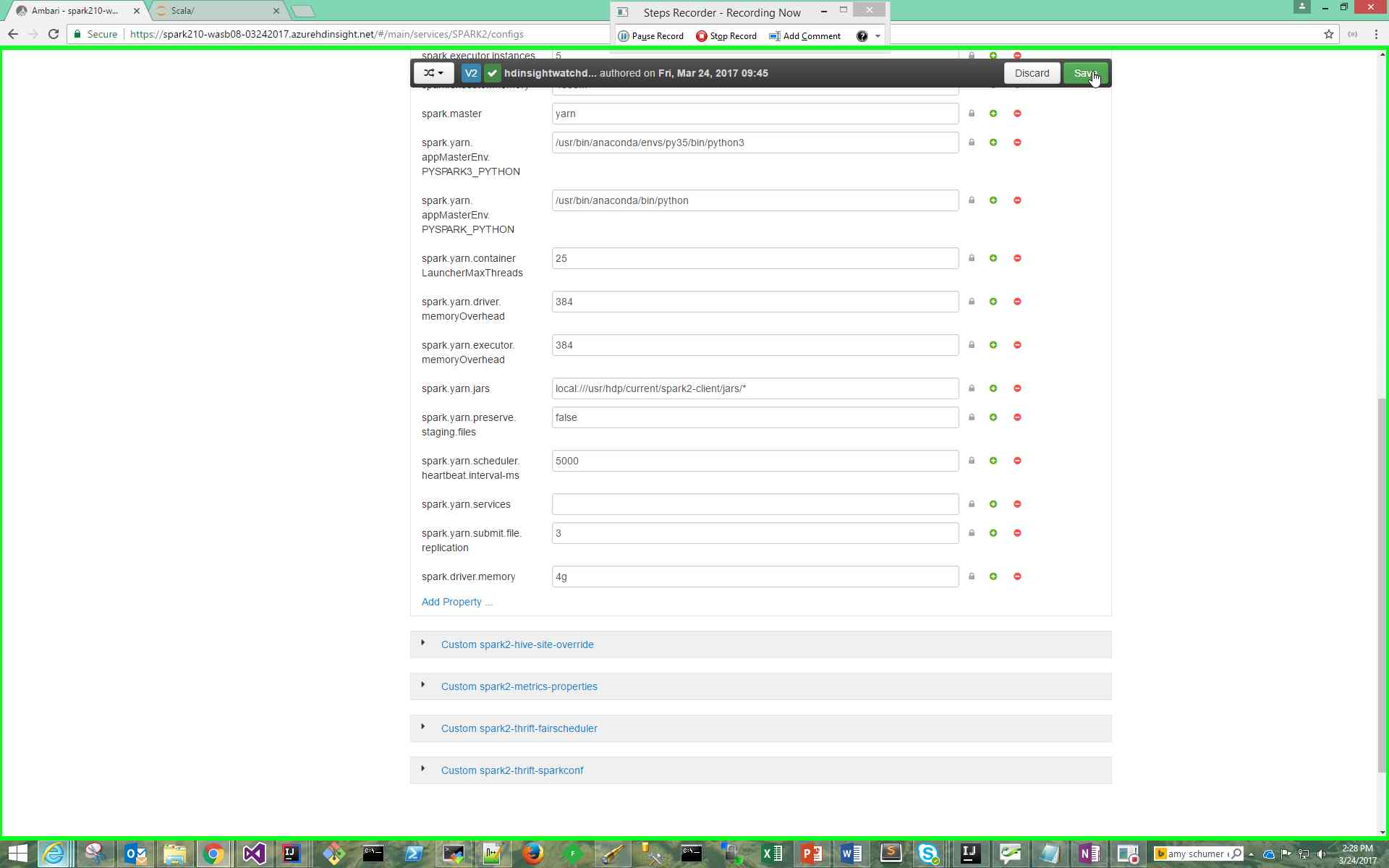

- Add configurations whose values are not set in the HDInsight Spark clusters with the following steps:

Note: These changes are cluster-wide, but they can be overridden for an individual application when the Spark job is submitted.
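As an illustration of overriding the cluster-wide values at submission time, the sketch below assembles a `spark-submit` command line that passes per-job settings through the `--conf key=value` option. The helper `build_spark_submit`, the application file `my_app.py`, and the chosen values are hypothetical; only the `--conf` option and the property names come from Spark itself.

```python
import shlex


def build_spark_submit(app_path: str, overrides: dict) -> list:
    """Return a spark-submit argument list with one --conf flag per override."""
    cmd = ["spark-submit"]
    for key, value in sorted(overrides.items()):  # sorted for a stable order
        cmd += ["--conf", f"{key}={value}"]
    cmd.append(app_path)
    return cmd


# Hypothetical per-job values that take the place of the cluster-wide defaults.
overrides = {
    "spark.executor.memory": "4g",
    "spark.executor.cores": "2",
}

command = build_spark_submit("my_app.py", overrides)
print(shlex.join(command))
```

Settings passed this way apply only to the submitted application, so the values configured in Ambari remain the defaults for every other job on the cluster.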